⭕️ Building Robust Sanitization Pipelines

Preserving data integrity when faced with ambiguous data

Like many startups, here at Athelas we process a broad spectrum of customer data every day. And this abundance of data comes with a proportional level of responsibility. Maintaining data integrity is of utmost importance, particularly in the healthcare space, where accurate data is necessary to deliver the highest standard of care. Moreover, since any data-driven decision is only as accurate as the data it’s based on, clean data is key to making well-informed business decisions.

How do we clean our raw data, collected from disparate sources, so that it remains consistent and accurate within our own organizations? Let’s dive into it!

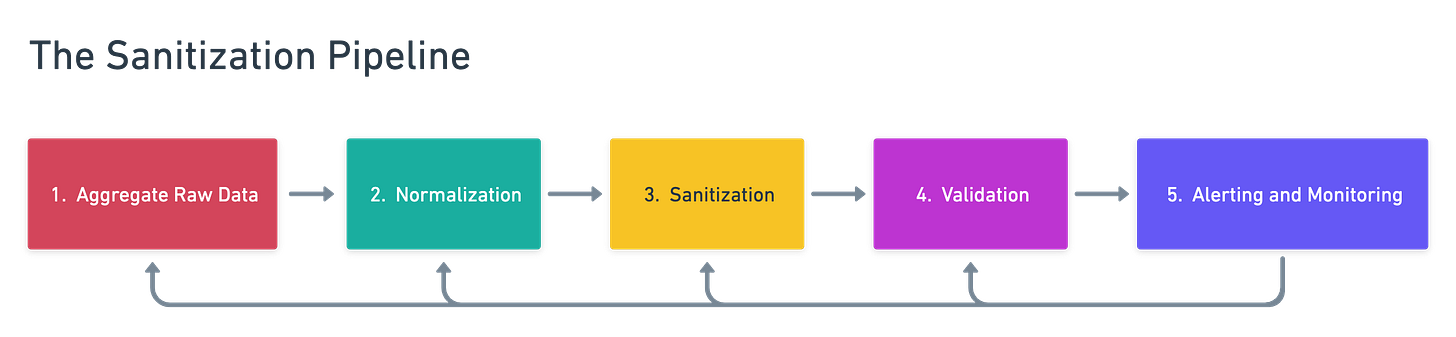

Five Steps

To architect a sanitization pipeline for unstructured, incoming data, we first break the process down into pieces. Specifically, we clean our data incrementally, in order of most simple (and least costly to implement) to most complex. This ensures we are optimizing our use of third-party tools for when we actually need them in the final data validation stages. No use reinventing the wheel!

Let’s examine each step individually.

Example: Pharmacy Prescriptions

Imagine you are a pharmacy technician responsible for fulfilling medication orders from two different clinics. The two clinics you partner with each use a proprietary prescription management system and each send you a uniquely formatted CSV of patient data.

We can see clear differences between these data sources. Casing, spacing, phone number formats, doctor naming convention, and drug names. Now let’s build this data sanitization pipeline!

Step 1: Aggregate raw, untrusted data

Oftentimes, particularly in healthcare, data is first entered into a system by a human via an ‘EHR’ or Electronic Health Records system. Examples of inputted raw data include freeform memos internal to a hospital system and intra-organization communications between a clinic and pharmacy. Different organizations and individuals have different standards for how to input data, which leaves plenty of room for ambiguity.

For instance, one doctor may accept international phone numbers for patients while another may not – the point here being that international phone numbers often have more than the ten digits that are characteristic of U.S. phone numbers.

The first step here is to pull all CSVs into one mega database, such as a PostgreSQL relational database, and funnel this into a table. Let’s call it patient_raw_input. We can then parse these entries into our code and run further sanitization logic onto this data.

Step 2: Normalization

By Normalization we mean the most basic transformations we can make to a piece of information to bring it to a standard format: removing hyphens, title-casing, and removing extraneous whitespace. These modifications are often feasible using built-in methods like Python’s: strip(), upper(), lower(), and replace().

In our example, we would:

Strip whitespace from all entries

Title-case all names

Remove hyphens, parenthesis, plus-es, and all other non-numeric values from the phone numbers

Upper case all medication names

The outcome looks something like this:

This step serves as pre-processing for the upcoming sanitization step. Say for these phone numbers, our next step wants to rely on length logic (10 digits in the case of U.S. phone numbers) as a proxy for identifying a valid phone number before further processing. While it is a relatively minor change, it is essential for improving data integrity down the line.

Step 3: Sanitization

The next step is sanitization. This is the second and more involved pass at validating data against common mistakes. Here we enforce stricter formatting guidelines and rules for data attributes. This step requires more work, which can seem pointless when we have third party tools available to us that can do this easily for us. It remains important however because it increases cost effectiveness by minimizing the number of API calls needed in the next step.

In the health space, relevant sanitizations might include ICD10 code, National Drug Code, and CPT code lookups. Names of medications, conditions, and medical procedures can also be validated within your codebase by comparing it against a running list of valid entries that is maintained internally.

Continuing with our pharmacy example, and specifically the phone number column, this entails removing the one-digit country code, then checking to see if the remainder of the phone number has a length of 10. We will then store a list of valid U.S. area codes into our Python program and compare the first three digits against this list. All entries that passed these steps are free to move on to the next step, and the ones we’ve rendered invalid are blocked from advancing into our master table.

Some simple but useful tools here may include Regular Expressions (RegEx), built-in methods that evaluate whether an entry is alphanumeric, or building out tables with all instances of valid entries to compare incoming data against. By doing so, we can ensure each piece of information is compliant with a standard format for all data throughout an organization.

Step 4: Validation

We’ve arrived at the most involved data processing step: rigorous validation. Here, we most likely want to employ an external, third-party API to verify the legitimacy of the data at hand. This is because third-party APIs present a wide range of offerings that would be incredibly time and resource intensive to build in-house. In our example this means using Twilio for phone number validation and the National Provider Identifier (NPI) API to verify prescribers.

For instance, if we wanted to validate a patient’s address, we could use the Google Maps API. Using this API, we could also fetch the elevation of a location, time zone, and nearby roads among other things to improve the quality of our product offerings.

Step 5: Monitoring and Alerting

The final step in this pipeline is monitoring and alerting. While this step may seem unrelated to the work of actual data sanitization, it is just as important. This is because the pipeline is not a singular, static concept. Rather, it’s cyclical and iterative – the goal is to create a tight feedback loop so that we ensure the data guidelines we’re enforcing are the most appropriate for the task at hand, and that any necessary improvements to this pipeline are immediately apparent so we can make improvements quickly.

In our pharmacy example, we would want to build alerting infrastructure to track what percent of prescriptions are fulfilled accurately. Our monitoring system should display the number of API requests made, corresponding patient information, and overall distribution of HTTP response codes to identify any issues. Let’s say that each patient demands its own API request, and that we’re making on the order of thousands of requests daily, each costing $1. You can easily see how malformed data with little monitoring can become very costly.

Some tools we could use in this example for constant monitoring and alerting include Slack bots, email notifications, dashboards built quickly in Retool, and PagerDuty for time-sensitive/high-priority alerts. These are all tools we use here at Athelas to drive down costs, intervene early when issues arise, and ensure maximal data integrity.

Summary

We hope you found this tutorial helpful! Enforcing data integrity across all levels within an organization is an important step in avoiding costly decisions down the road due to unnecessary third-party tool usage.

If you’re interested in our mission of making healthcare preventative or want to learn more about us, feel free to contact careers@athelas.com or apply directly on our careers page: https://www.athelas.com/careers.