⭕️ Managing Async Tasks with Celery

Executing, Scheduling, Monitoring, and Debugging Repetitive Tasks in Python

There comes a point in the life of every software company where asynchronous processing must be embraced. This could be due to time-consuming workloads, tasks that wait on responses from 3rd-party APIs, or operations where it makes no sense to have users wait for completion.

For example:

sending bulk emails

uploading large files

heavy image processing

3rd-party APIs that could experience downtime

Furthermore, one can always find some batch processing or periodic jobs that need to run asynchronously by design.

At Athelas, we use Celery to execute asynchronous tasks in a distributed manner. Example tasks include:

White Blood Cell detection on images captured by the Athelas One

Sending daily reminder texts to our patients

Crunching statistics on our databases every day around midnight

Communicating with health insurance APIs that experience downtime

Why Celery?

We like Python, and Celery is the de facto standard Python task queue. There are other task queues available (such as RQ and dramatiq), but Celery is by far the most popular. It’s been around for a long time, has an established user base, and supports several broker backends, including Redis and RabbitMQ. It has a reasonable learning curve and is efficient, flexible, and easy to maintain.

Basic Concepts

The Broker

Celery supports several brokers, the message transports that hold its task queues. Our team has tried both Redis and RabbitMQ, and we saw significantly better performance and reliability with RabbitMQ.

Redis as a Celery broker currently has certain quirks that are being addressed in the open-source world. For instance, there are circumstances where one task can be dispatched and executed multiple times (read more about this issue here). This was not acceptable for us.

Executing Tasks

Celery has a pretty simple Python API. Below is an example of a Celery job that adds two variables and saves the values into a SQL database:

Now that we’ve written this task, we can run it like so:

The addition and database insertion are then performed asynchronously on the consumer (Celery worker) node, so the producer node does not have to wait for them to complete before proceeding.

Real-World Tips and Tricks

We’ve found that even though Celery tasks are simple to run, task failures are difficult to manage. Tasks sometimes error out mid-execution, which forces us to account for rollbacks and re-runs. Here are some of the lessons we’ve learned to prevent errors and mitigate operational risks.

Retrying Tasks

Celery tasks can fail for several reasons, so it’s a good idea to write them in a crash-safe way. If a Celery task is retryable, it’s easy to recover from a failure, and for a task to be retryable, it must satisfy two properties:

Atomicity: In an atomic task, either everything succeeds or everything fails. We can think of this as similar to a database transaction: either the entire transaction goes through or none of it gets written. The database will never be in a state where only half of the rows in the transaction are updated and the other half are not.

Idempotency: If we can apply the same task multiple times without changing the result beyond the initial application, then the task is idempotent. For example, the absolute value function abs(x) is idempotent since abs(abs(x)) = abs(x), so the operation can be applied any number of times with the same result as after its first application.
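Both properties can be made concrete with Python's built-in `sqlite3` module, whose connection context manager commits on success and rolls back on an exception. The `reminders` table, `mark_sent`, and the simulated crash are illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reminders (user_id INTEGER PRIMARY KEY, sent INTEGER)")
conn.commit()


def mark_sent(conn, user_ids, crash_after=None):
    """Mark reminders as sent for all user_ids in a single transaction.

    crash_after is a hypothetical knob that simulates a failure partway through.
    """
    with conn:  # commit if the block succeeds, roll back if it raises
        for i, user_id in enumerate(user_ids):
            if crash_after is not None and i == crash_after:
                raise RuntimeError("simulated crash mid-task")
            # INSERT OR IGNORE means re-running never creates duplicate rows.
            conn.execute(
                "INSERT OR IGNORE INTO reminders (user_id, sent) VALUES (?, 1)",
                (user_id,),
            )


try:
    mark_sent(conn, [1, 2, 3], crash_after=2)
except RuntimeError:
    pass
# Atomic: the two rows written before the crash were rolled back.
print(conn.execute("SELECT COUNT(*) FROM reminders").fetchone()[0])

mark_sent(conn, [1, 2, 3])
mark_sent(conn, [1, 2, 3])  # idempotent: the second run changes nothing
print(conn.execute("SELECT COUNT(*) FROM reminders").fetchone()[0])
```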

Let’s look at this example task where we send emails to a provided list of user_ids:

The task above is neither atomic nor idempotent:

It is not atomic because if this task fails in the middle of execution, some users will receive the email and others will not

It is not idempotent because running this task multiple times will cause the users to receive multiple emails

Let’s see how we can improve this task:

We have made two changes to the flow:

Delegating the actual email sending to a separate per-user task. This way, if sending one email fails, only that user is affected, not the others

Recording each email send event in our database so that we don’t double-send emails to users if the task fails and is re-run

Monitoring and Alerting

It’s critical to set up monitoring and alerting on asynchronous tasks, since they often handle work that indirectly affects the user experience. We want to capture errors early and alert our software team when necessary.

Logging is important for Celery tasks. We try to log as much as we can because there is no user interface for debugging these tasks.

We use Flower to monitor Celery tasks in real time. Flower provides a nice UI where we can search for tasks and workers, check completion times, see what’s currently running, and more. It’s a must-have when working with Celery async tasks.

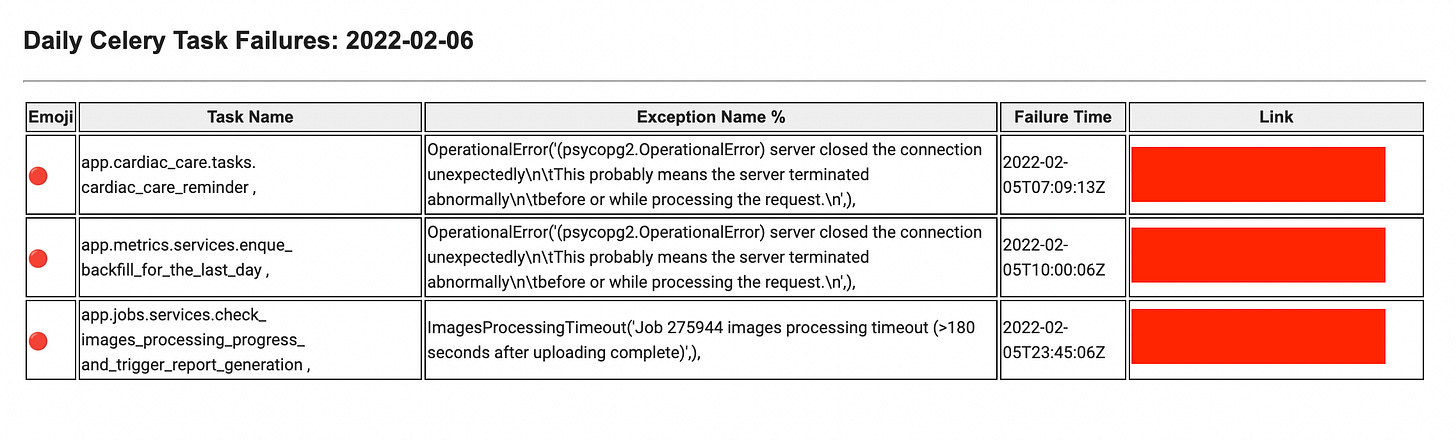

It’s good to be alerted when tasks fail repeatedly, for instance due to out-of-memory (OOM) errors or simply code bugs. We’ve set up a custom workflow to alert our engineering team of such failures. This is accomplished by setting up another app called celery-monitor and using the Task-Events API provided by Celery (example found here).

We use the PagerDuty events API to trigger an alert upon task failure. By default, if multiple incidents are triggered by the same underlying issue, the team would be notified for each duplicate incident. We can instead group these issues by a dedup_key consisting of failed_task_name + task_failure_hour. With this dedup_key, our team is only alerted once per hour if some task repeatedly fails. We’ve also set up a daily report email to summarize these task failures.
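The dedup-key scheme can be sketched against PagerDuty's Events API v2 using only the standard library. The exact key format (task name plus UTC hour), the `source` string, and the severity are illustrative choices in this sketch; `routing_key` is the integration key of your PagerDuty service.

```python
import json
import urllib.request
from datetime import datetime, timezone

PAGERDUTY_EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"


def build_dedup_key(task_name, failed_at):
    # One key per task per clock hour (UTC): repeated failures of the same task
    # within that hour collapse into a single PagerDuty incident.
    return f"{task_name}:{failed_at.astimezone(timezone.utc):%Y-%m-%dT%H}"


def build_trigger_event(routing_key, task_name, exception, failed_at=None):
    failed_at = failed_at or datetime.now(timezone.utc)
    return {
        "routing_key": routing_key,
        "event_action": "trigger",
        "dedup_key": build_dedup_key(task_name, failed_at),
        "payload": {
            "summary": f"Celery task {task_name} failed: {exception}",
            "source": "celery-monitor",
            "severity": "error",
        },
    }


def send_to_pagerduty(event):
    # POST the event as JSON; PagerDuty answers 202 when it accepts the event.
    request = urllib.request.Request(
        PAGERDUTY_EVENTS_URL,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```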

PS: Here’s a useful checklist for building great Celery tasks.

Summary

Celery is a powerful asynchronous task queueing system that we use extensively at Athelas. We hope that this post helped you understand a bit more about where it shines, and what to look out for.

If you’re interested in building the future of healthcare or are curious to learn more about our tech infrastructure, feel free to contact us at careers@athelas.com or apply directly on our careers page: https://www.athelas.com/careers.