⭕️ Six Ways to Optimize SQLAlchemy

Level up your usage of the most popular Python SQL Library

At Athelas, we support 40,000+ patients across the world with integrated medical devices that relay millions of data points to our servers every month. To reliably process and store this data, we’ve built a backend data system powered by PostgreSQL. And we interact with our data models through SQLAlchemy.

💡 Note: If you’re new to SQLAlchemy, we recommend that you check out the official website at https://www.sqlalchemy.org/ for a quick introduction to what it is, and why people use it.

When any business scales, API performance tends to degrade (and with that degradation, the business must adapt!). As we’ve scaled our healthcare operations, we’ve made some major and minor adjustments to our data infra to significantly speed up our products and services. Here are six methods for speeding up your SQLAlchemy interactions:

1. Load Fewer Fields

If the class you’re pulling from has large text or binary fields, you can avoid pulling those extra fields by loading only the columns you need for your use case with the load_only option.

For example, let’s assume we have a Book class with three fields:

Now let’s say you want to show a preview of the contents of the book with just the title and summary, it would be overkill to load in the full_content (as it would significantly slow down the UI to do so). Instead, you can choose to only load the summary and title as follows:

The payload will be smaller and your query will run faster.

2. Skip the ORM

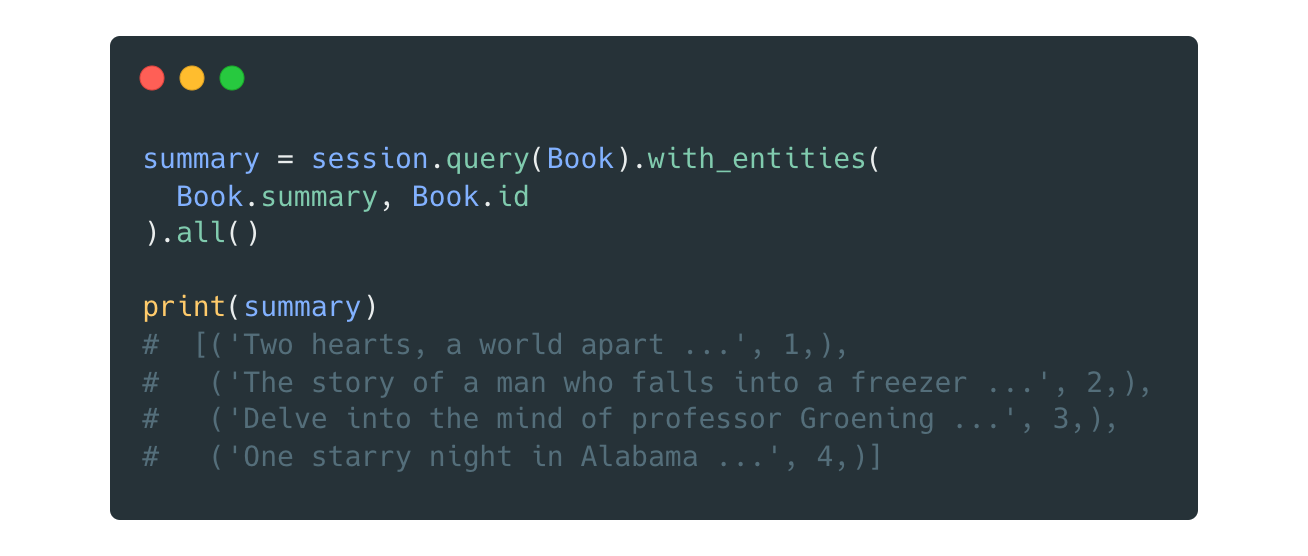

Assuming you know exactly which fields you need to extract and don’t require the convenience of ORM down the line, you could use the with_entities processor to skip the ORM and get the data you’ve requested as a tuple!

This return type will be a list of tuples, and we need to access them based on the sequence. For example, result[0][0] will be the summary of the first book returned by the query above.

Make sure to enforce some sort of ordering with an order_by() clause for your behavior to be deterministic. Otherwise, you’ll encounter flaky behavior.

3. Lazy loading at Mapping

Lazy loading (a.k.a. on-demand loading) is an optimization technique available in SQLAlchemy. You can set certain class attributes as lazy=True and this will prevent that attribute from loading instantly upon object instantiation.

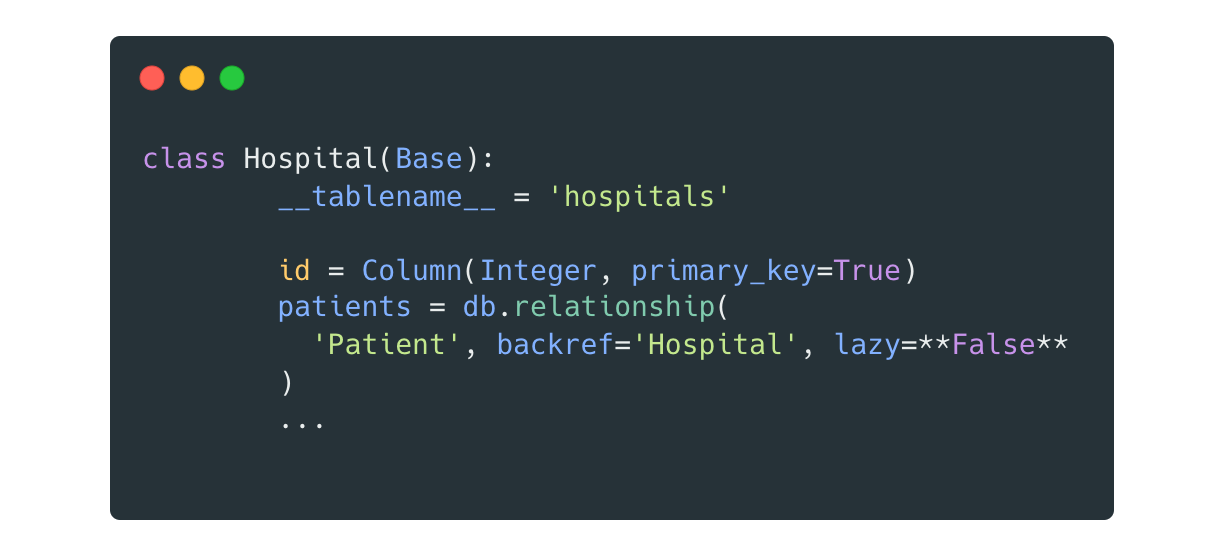

For example, imagine you have a class named Hospital, and the Hospital class has a thousand Patients associated with it.

When we map the relationship between Hospital and Patient, we may want to make it lazy loading with something like this:

Now, every time you pull a Hospital object (e.g. Hospital.query.get('1923')) it will not automatically also load the thousand associated Patient objects.

4. Eager loading at Mapping

Eager loading is considered the opposite of lazy loading - and there are times that eager loading is quite useful. When an attribute is eagerly loaded, that means that you are making an active relation between two objects. For example, taking the Hospital and Patient example from Method 3, if we instead eager loaded all the patients onto the Hospital object, then it would look something like this:

Now, whenever you pull a Hospital object, all the Patients are auto-loaded so you can access the list of a thousand patients via the hospital.patients without any additional querying.

5. Write Raw SQL

As flexible as SQLAlchemy may be, you can always forego the ORM and write raw, performant SQL queries on your own while leveraging the convenience of its connection and serialization.

For example:

You can then access all the Hospitals that are loaded into the results array.Note that this returns a list of tuples, and the order of those tuples corresponds to what was queried.

💡 Caveat emptor! If you’re not careful, you could open yourself up to SQL injection attacks. Always sanitize your inputs. Relevant XKCD: https://xkcd.com/327/

6. Add indexes

Indexes are the bread and butter when it comes to speeding up your queries - it is the perfect example of trading space for time.

Commons indexes are B-Tree for most data types and GIN for JSONB. In our specific use case, we do a lot of ilike operations on names and we find the GIN index with gin_trgm_ops useful.

Let’s assume we have a User class, with first_name and last_name as attributes, and we’d like to speed up the ilike queries on those fields.

We could write something like this:

The index will change ilike queries plans from sequential scans ( O(n) time) to a bitmap heap scan (O(log(n)), which speeds up queries by 40-80% depending on the use case and the amount of data.

In Summary

SQLAlchemy is a powerful library that can be used by engineering teams small and large alike to quickly and systematically deploy scalable backends. Though easy to set up, as databases scale, basic implementations of SQLAlchemy may not lead to optimized experiences for end-users.

In order to address this, you can follow a couple of core principles to continue using SQLAlchemy as you scale:

Only load the data you need (

load_only,with_entities)Properly set up data classes (eager & lazy loading)

Use Indexes for optimizing query time

Our engineering team is still small and we are all actively thinking about ways to optimize our services to provide the best experiences to our customers: doctors and their patients. If you’re looking for a new gig in a fast-paced, electric environment, Athelas is the place for you!

Join our team now by emailing us at careers@getathelas.com or applying on our Careers Page.

Happy querying!

Real SQL? Or Microsoft borrowed SQL?

😉

Thank you a lot